Data compression

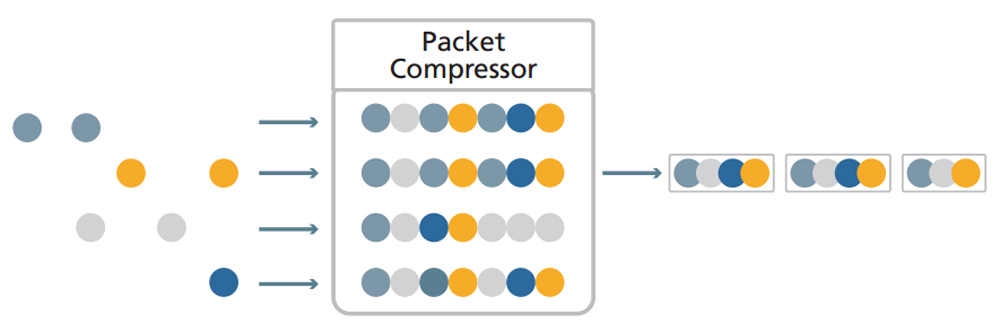

In signal processing, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy, so no information is lost. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is called an encoder, and one that reverses the process (decompression) is called a decoder.
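The distinction between the two kinds of compression can be illustrated with a minimal Python sketch. It assumes the standard-library `zlib` module as the lossless encoder and decoder, and uses a hypothetical helper, `lossy_quantize`, that rounds samples to a coarse grid as a stand-in for a lossy scheme; neither is meant as a reference implementation.

```python
import zlib


def lossless_roundtrip(data: bytes):
    """Encode with zlib, then decode; the decoded bytes equal the input."""
    encoded = zlib.compress(data)
    decoded = zlib.decompress(encoded)
    return encoded, decoded


def lossy_quantize(samples, step):
    """Hypothetical lossy step: round each sample to a multiple of `step`.

    Fine detail smaller than `step` is discarded and cannot be recovered.
    """
    return [step * round(s / step) for s in samples]


# Redundant input: the same three bytes repeated many times.
text = b"abcabcabc" * 100
encoded, decoded = lossless_roundtrip(text)
print(len(text), len(encoded), decoded == text)

# Lossy example: nearby values collapse onto the same grid point.
print(lossy_quantize([0, 7, 13, 250], 8))
```

The lossless round trip returns the input exactly, while the quantized samples only approximate the originals.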

The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, which is used for error detection and correction, or line coding, the means of mapping data onto a signal.

Compression is useful because it reduces the resources required to store and transmit data. However, computational resources are consumed in the compression and decompression processes, so data compression is subject to a space-time complexity trade-off. For example, a compression scheme for video may require expensive hardware to decompress the video fast enough to be viewed while it is being decompressed, and the option to decompress the entire video before watching it may be inconvenient or require additional storage. The design of data compression schemes involves trade-offs between various factors, including the degree of compression, the amount of distortion introduced (when using lossy data compression), and the computational resources required to compress and decompress the data.
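One way to observe this trade-off is to compress the same input at several effort levels and record both the output size and the time taken. The sketch below assumes Python's standard `zlib` module, whose levels range from 0 (no compression) to 9 (most effort); the helper `measure_levels` and the sample data are hypothetical, and the timings will vary by machine.

```python
import time
import zlib


def measure_levels(data: bytes, levels=(0, 1, 6, 9)) -> dict:
    """Return {level: (compressed_size, seconds)} for each zlib level."""
    results = {}
    for level in levels:
        start = time.perf_counter()
        compressed = zlib.compress(data, level)
        elapsed = time.perf_counter() - start
        results[level] = (len(compressed), elapsed)
    return results


# Hypothetical sample input: mildly repetitive log-like text.
sample = b"".join(
    b"record %d: status=ok value=%d\n" % (i, i * i % 97) for i in range(5000)
)

for level, (size, seconds) in measure_levels(sample).items():
    print(f"level {level}: {size} bytes in {seconds:.4f} s")
```

Higher levels usually produce smaller output at the cost of more CPU time, although the exact relationship depends on the data.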

The theoretical basis of compression is provided by information theory: Shannon's source coding theorem underlies lossless compression, with algorithmic information theory offering a related view based on description length, and rate-distortion theory underlies lossy compression. Information theory and rate-distortion theory were essentially created by Claude Shannon, who published seminal papers on the subject in the late 1940s and early 1950s. Other topics associated with compression include coding theory and statistical inference.
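The information-theoretic limit on lossless compression can be made concrete with Shannon entropy. The following sketch estimates the entropy of a byte string from its byte frequencies; the function names `empirical_entropy` and `order0_bound_bytes` are hypothetical. The estimate assumes that bytes are drawn independently, so it bounds only coders that ignore context; practical compressors that model context can do better on structured data.

```python
import math
from collections import Counter


def empirical_entropy(data: bytes) -> float:
    """Shannon entropy of the byte frequencies in `data`, in bits per byte."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def order0_bound_bytes(data: bytes) -> float:
    """Approximate minimum size in bytes for a coder that treats bytes
    as independent symbols (an assumption, not a general limit)."""
    return empirical_entropy(data) * len(data) / 8


for example in (b"aaaa", b"abab", bytes(range(256))):
    print(len(example), empirical_entropy(example), order0_bound_bytes(example))
```

A string with a single repeated byte has zero entropy under this model, while a string in which all 256 byte values are equally frequent needs the full 8 bits per byte.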

Data compression can be seen as a special case of data differencing. Data differencing consists of producing a difference given a source and a target, while patching reproduces the target given a source and a difference. Since there is no separate source and target in data compression, one can consider data compression as data differencing with empty source data, the compressed file corresponding to a difference from nothing. This is the same as considering absolute entropy (corresponding to data compression) as a special case of relative entropy (corresponding to data differencing) with no source data.

The term differential compression is used to emphasise the connection to data differencing.
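The relationship between a source, a target, and a difference can be sketched with Python's standard `zlib` module by passing the source as a preset dictionary (the `zdict` argument). This is only an illustration under stated assumptions: the helpers `make_diff` and `apply_patch` are hypothetical, a preset dictionary is a stand-in for a dedicated delta encoder, and DEFLATE can only refer back to roughly the last 32 KiB of the source.

```python
import zlib


def make_diff(source: bytes, target: bytes) -> bytes:
    """Encode `target` relative to `source`; an empty source means
    ordinary compression, a difference from nothing."""
    if source:
        comp = zlib.compressobj(level=9, zdict=source)
    else:
        comp = zlib.compressobj(level=9)
    return comp.compress(target) + comp.flush()


def apply_patch(source: bytes, diff: bytes) -> bytes:
    """Rebuild the target from the same `source` and the difference."""
    if source:
        decomp = zlib.decompressobj(zdict=source)
    else:
        decomp = zlib.decompressobj()
    return decomp.decompress(diff) + decomp.flush()


# Hypothetical data: the target differs from the source in one place.
source = b"".join(
    b"line %d: value %d\n" % (i, (i * 7919) % 1009) for i in range(300)
)
target = source.replace(b"line 42:", b"line forty-two:")

with_source = make_diff(source, target)
from_nothing = make_diff(b"", target)
print(len(target), len(with_source), len(from_nothing))
```

With the source available, the difference is expected to be much smaller than the output of compressing the target on its own, which corresponds to the empty-source case.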